Build a Large Language Model (From Scratch)

This repository contains the code for developing, pretraining, and finetuning a GPT-like LLM and is the official code repository for the book Build a Large Language Model (From Scratch).

In Build a Large Language Model (From Scratch), you'll learn and understand how large language models (LLMs) work from the inside out by coding them from the ground up, step by step. In this book, I'll guide you through creating your own LLM, explaining each stage with clear text, diagrams, and examples.

The method described in this book for training and developing your own small-but-functional model for educational purposes mirrors the approach used in creating large-scale foundational models such as those behind ChatGPT. In addition, this book includes code for loading the weights of larger pretrained models for finetuning.

- Link to the official source code repository

- Link to the book at Manning (the publisher's website)

- Link to the book page on Amazon.com

- ISBN 9781633437166

To download a copy of this repository, click on the Download ZIP button or execute the following command in your terminal:

    git clone --depth 1 https://github.com/rasbt/LLMs-from-scratch.git

(If you downloaded the code bundle from the Manning website, please consider visiting the official code repository on GitHub at https://github.com/rasbt/LLMs-from-scratch for the latest updates.)

Table of Contents

Please note that this README.md file is a Markdown (.md) file. If you have downloaded this code bundle from the Manning website and are viewing it on your local computer, I recommend using a Markdown editor or previewer for proper viewing. If you haven't installed a Markdown editor yet, Ghostwriter is a good free option.

You can alternatively view this and other files on GitHub at https://github.com/rasbt/LLMs-from-scratch in your browser, which renders Markdown automatically.

Tip: If you're seeking guidance on installing Python and Python packages and setting up your code environment, I suggest reading the README.md file located in the setup directory.

| Chapter Title | Main Code (for Quick Access) | All Code + Supplementary |
|---|---|---|
| Setup recommendations<br>How to best read this book | - | - |
| Ch 1: Understanding Large Language Models | No code | - |
| Ch 2: Working with Text Data | - ch02.ipynb<br>- dataloader.ipynb (summary)<br>- exercise-solutions.ipynb | ./ch02 |
| Ch 3: Coding Attention Mechanisms | - ch03.ipynb<br>- multihead-attention.ipynb (summary)<br>- exercise-solutions.ipynb | ./ch03 |
| Ch 4: Implementing a GPT Model from Scratch | - ch04.ipynb<br>- gpt.py (summary)<br>- exercise-solutions.ipynb | ./ch04 |
| Ch 5: Pretraining on Unlabeled Data | - ch05.ipynb<br>- gpt_train.py (summary)<br>- gpt_generate.py (summary)<br>- exercise-solutions.ipynb | ./ch05 |
| Ch 6: Finetuning for Text Classification | - ch06.ipynb<br>- gpt_class_finetune.py<br>- exercise-solutions.ipynb | ./ch06 |
| Ch 7: Finetuning to Follow Instructions | - ch07.ipynb<br>- gpt_instruction_finetuning.py (summary)<br>- ollama_evaluate.py (summary)<br>- exercise-solutions.ipynb | ./ch07 |
| Appendix A: Introduction to PyTorch | - code-part1.ipynb<br>- code-part2.ipynb<br>- DDP-script.py<br>- exercise-solutions.ipynb | ./appendix-A |
| Appendix B: References and Further Reading | No code | ./appendix-B |
| Appendix C: Exercise Solutions | - list of exercise solutions | ./appendix-C |
| Appendix D: Adding Bells and Whistles to the Training Loop | - appendix-D.ipynb | ./appendix-D |
| Appendix E: Parameter-efficient Finetuning with LoRA | - appendix-E.ipynb | ./appendix-E |

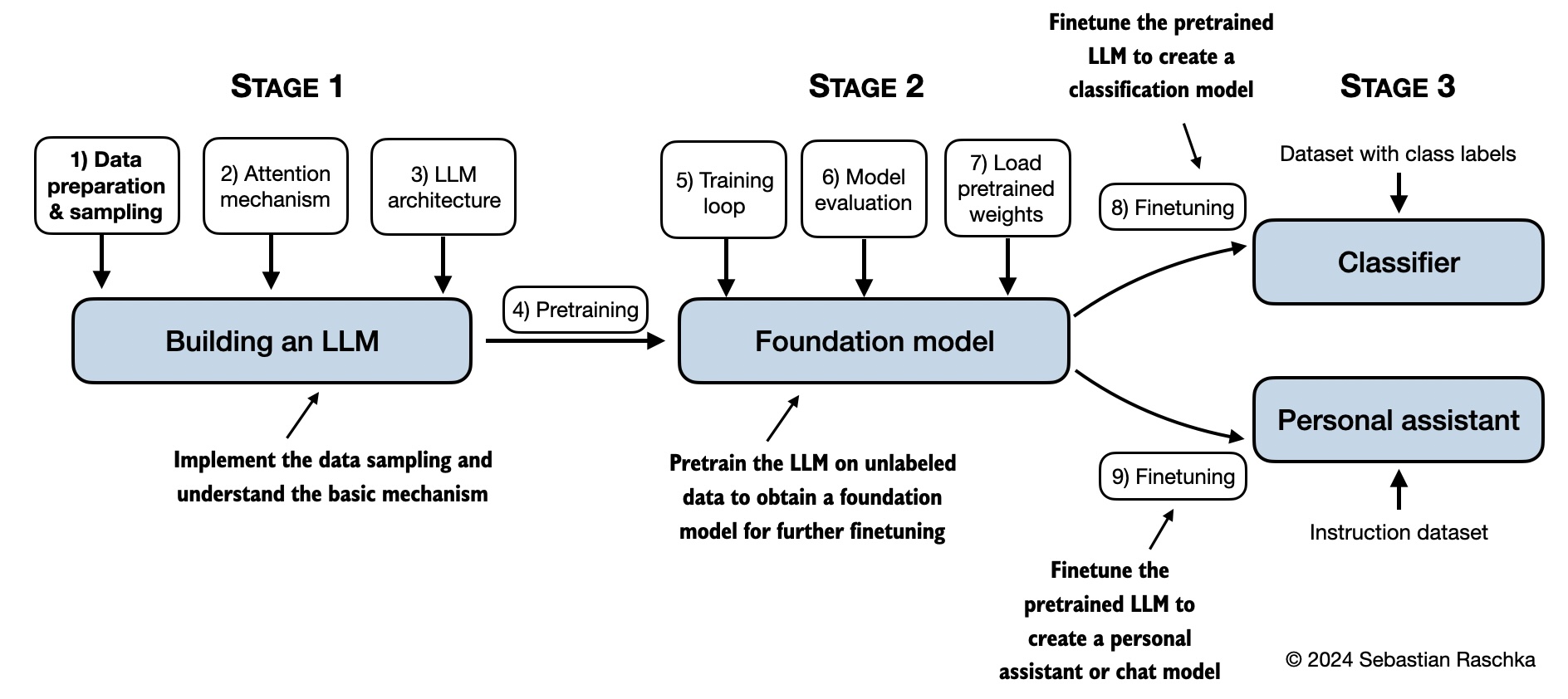

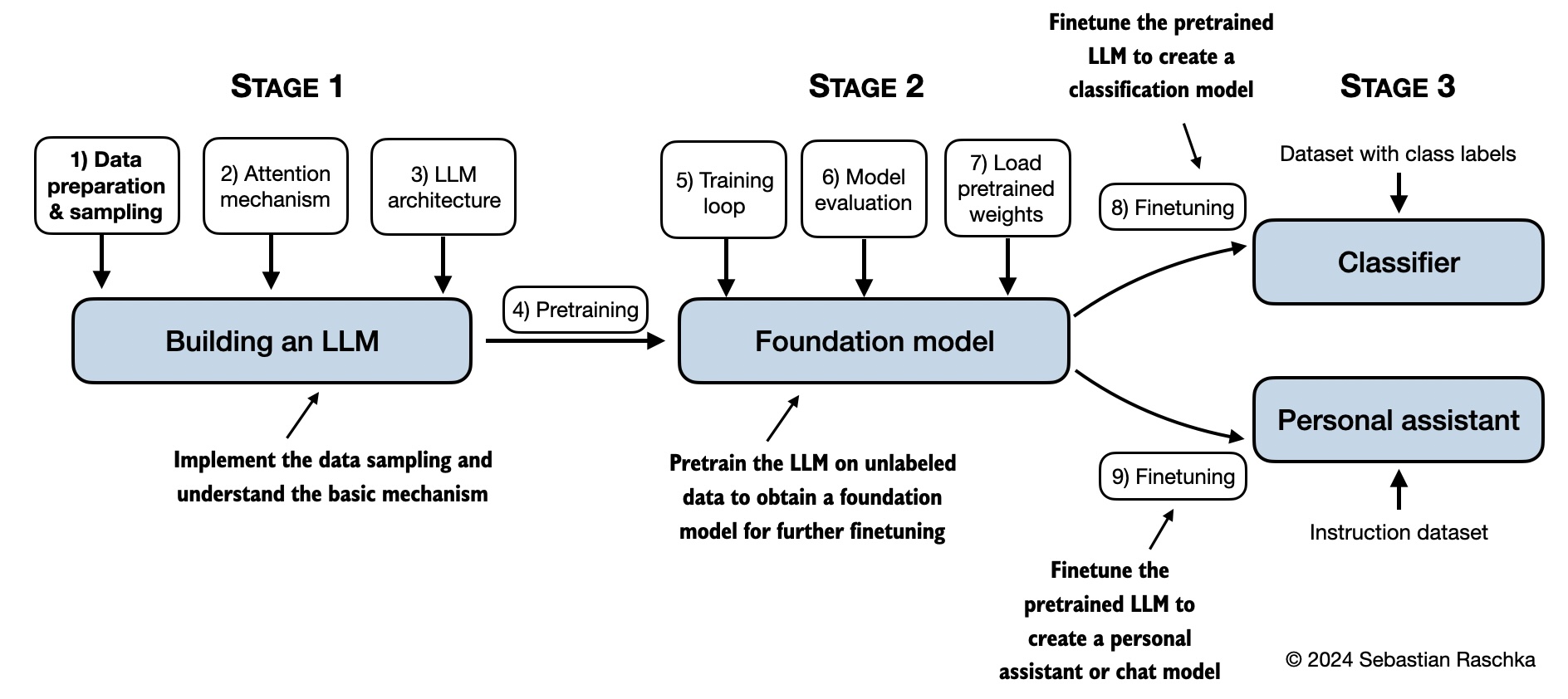

The mental model below summarizes the contents covered in this book.

Prerequisites

The most important prerequisite is a strong foundation in Python programming. With this knowledge, you will be well prepared to explore the fascinating world of LLMs and understand the concepts and code examples presented in this book.

If you have some experience with deep neural networks, you may find certain concepts more familiar, as LLMs are built upon these architectures.

This book uses PyTorch to implement the code from scratch without using any external LLM libraries. While proficiency in PyTorch is not a prerequisite, familiarity with PyTorch basics is certainly useful. If you are new to PyTorch, Appendix A provides a concise introduction to PyTorch. Alternatively, you may find my book, PyTorch in One Hour: From Tensors to Training Neural Networks on Multiple GPUs, helpful for learning about the essentials.

Hardware Requirements

The code in the main chapters of this book is designed to run on conventional laptops within a reasonable timeframe and does not require specialized hardware. This approach ensures that a wide audience can engage with the material. Additionally, the code automatically utilizes GPUs if they are available. (Please see the setup doc for additional recommendations.)
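The automatic GPU selection mentioned above usually follows a pattern like the one below. This is a minimal sketch, not the exact code from the chapters: the helper name `pick_device` is hypothetical, and the Apple-silicon (`mps`) branch is an assumption about what you might want on a Mac. The `torch` calls are only attempted if PyTorch is installed.

```python
def pick_device(cuda_available: bool, mps_available: bool = False) -> str:
    """Return the preferred device name given what is available.

    Hypothetical helper for illustration; not part of the book's code.
    """
    if cuda_available:
        return "cuda"  # NVIDIA GPU
    if mps_available:
        return "mps"   # Apple-silicon GPU (assumed to be desirable here)
    return "cpu"       # fallback that works everywhere


try:
    import torch

    # Older PyTorch versions may not ship the mps backend, so look it up defensively.
    mps_backend = getattr(torch.backends, "mps", None)
    mps_ok = mps_backend is not None and mps_backend.is_available()

    device_name = pick_device(torch.cuda.is_available(), mps_ok)
    device = torch.device(device_name)
except ImportError:
    # PyTorch is not installed; the README in the setup directory covers installation.
    device_name, device = "cpu", None

print(f"Using device: {device_name}")
```

A model and its input tensors would then be moved with `.to(device)` before training or inference.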

Video Course

A companion video course (17 hours and 15 minutes) is available, in which I code through each chapter of the book. The course is organized into chapters and sections that mirror the book's structure, so it can be used as a standalone alternative to the book or as a complementary code-along resource.

Companion Book / Sequel

Build A Reasoning Model (From Scratch), while a standalone book, can be considered a sequel to Build A Large Language Model (From Scratch).

It starts with a pretrained model and implements different reasoning approaches, including inference-time scaling, reinforcement learning, and distillation, to improve the model's reasoning capabilities.

Like Build A Large Language Model (From Scratch), Build A Reasoning Model (From Scratch) takes a hands-on approach, implementing these methods from scratch.

- Amazon link (TBD)

- Manning link

- GitHub repository

Exercises

Each chapter of the book includes several exercises. The solutions are summarized in Appendix C, and the corresponding code notebooks are available in the main chapter folders of this repository (for example, ./ch02/01_main-chapter-code/exercise-solutions.ipynb).
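If you have cloned the repository, a short script can list the exercise-solution notebooks. The sketch below assumes the other chapter folders follow the same layout as the ch02 example above, which this README does not state explicitly. The function name `find_exercise_solutions` is hypothetical, and `LLMs-from-scratch` is the folder name `git clone` creates by default.

```python
from pathlib import Path


def find_exercise_solutions(repo_root: str) -> list[Path]:
    """Return all exercise-solution notebooks under repo_root, sorted by path.

    Assumes the <folder>/01_main-chapter-code/exercise-solutions.ipynb layout
    shown for ch02; folders that do not follow it are simply not matched.
    """
    root = Path(repo_root)
    pattern = "*/01_main-chapter-code/exercise-solutions.ipynb"
    return sorted(root.glob(pattern))


if __name__ == "__main__":
    # Prints nothing if the folder is missing or uses a different layout.
    for notebook in find_exercise_solutions("LLMs-from-scratch"):
        print(notebook)
```

Run it from the directory that contains your clone, or pass a different path to `find_exercise_solutions`.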

In addition to the code exercises, you can download a free 170-page PDF titled Test Yourself On Build a Large Language Model (From Scratch) from the Manning website. It contains approximately 30 quiz questions and solutions per chapter to help you test your understanding.

Bonus Material

Several folders contain optional materials as a bonus for interested readers:

Setup

Chapter 2: Working With Text Data

Chapter 3: Coding Attention Mechanisms

Chapter 4: Implementing a GPT Model From Scratch

Chapter 5: Pretraining on Unlabeled Data

- Alternative Weight Loading Methods

- Pretraining GPT on the Project Gutenberg Dataset

- Adding Bells and Whistles to the Training Loop

- Optimizing Hyperparameters for Pretraining

- Building a User Interface to Interact With the Pretrained LLM

- Converting GPT to Llama

- Memory-efficient Model Weight Loading

- Extending the Tiktoken BPE Tokenizer with New Tokens

- PyTorch Performance Tips for Faster LLM Training

- LLM Architectures

Chapter 6: Finetuning for classification

Chapter 7: Finetuning to follow instructions

- Dataset Utilities for Finding Near Duplicates and Creating Passive Voice Entries

- Evaluating Instruction Responses Using the OpenAI API and Ollama

- Generating a Dataset for Instruction Finetuning

- Improving a Dataset for Instruction Finetuning

- Generating a Preference Dataset With Llama 3.1 70B and Ollama

- Direct Preference Optimization (DPO) for LLM Alignment

- Building a User Interface to Interact With the Instruction-Finetuned GPT Model

More bonus material from the Reasoning From Scratch repository:

Qwen3 (From Scratch) Basics

Evaluation

Questions, Feedback, and Contributing to This Repository

I welcome all sorts of feedback, best shared via the Manning Forum or GitHub Discussions. Likewise, if you have any questions or just want to bounce ideas off others, please don't hesitate to post these in the forum as well.

Please note that since this repository contains the code corresponding to a print book, I currently cannot accept contributions that would extend the contents of the main chapter code, as it would introduce deviations from the physical book. Keeping it consistent helps ensure a smooth experience for everyone.

Citation

If you find this book or code useful for your research, please consider citing it.

Chicago-style citation:

Raschka, Sebastian. Build A Large Language Model (From Scratch). Manning, 2024. ISBN: 978-1633437166.

BibTeX entry:

@book{build-llms-from-scratch-book,

author = {Sebastian Raschka},

title = {Build A Large Language Model (From Scratch)},

publisher = {Manning},

year = {2024},

isbn = {978-1633437166},

url = {https://www.manning.com/books/build-a-large-language-model-from-scratch},

github = {https://github.com/rasbt/LLMs-from-scratch}

}